Without going into too much detail, it suffices to say that the garbage collector of Ruby 2.0 fixes this, and we can now exploit CoW. An extremely simplified version is this - when the garbage collector of Ruby 1.9 kicks in, a write would have been made, thus rendering CoW useless. More accurately, the garbage collection implementation of Ruby 1.9 does not make this possible. Unfortunately, Ruby 1.9 does not make this possible. In theory, the operating system would be able to take advantage of CoW. So how does this relate to Ruby 1.9/2.0 and Unicorn? Only when a write is made- then we copy the child process into physical memory. Since they are exact copies, both child and parent processes can share the same physical memory. However, the actual physical memory copied need not be made. When a child process is forked, it is the exact same copy as the parent process. To understand why, we need to understand a little bit about forking. If you are using Ruby 1.9, you should seriously consider switching to Ruby 2.0. In this article, we will explore a few ways to exploit Unicorn’s concurrency, while at the same time control the memory consumption. Without paying any heed to the memory consumption of your app, you may well find yourself with an overburdened cloud server. Rails apps running on Unicorn tend to consume much more memory. Therefore, Unicorn gives our Rails apps concurrency even when they are not thread safe.

#Rails unicorn https apache code#

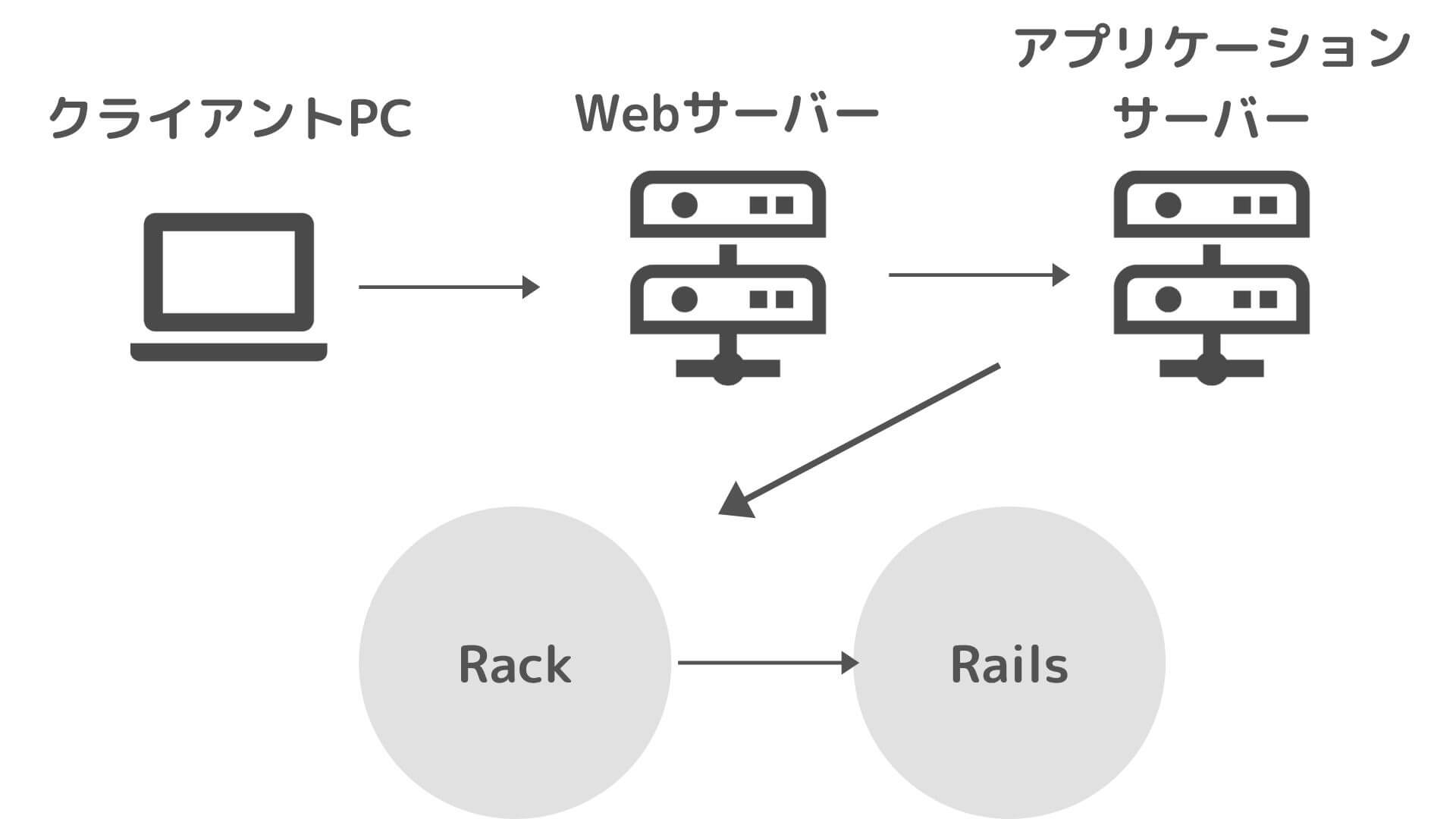

If we cannot ensure that our code is thread safe, then concurrent web servers such as Puma and even alternative Ruby implementations that exploit concurrency and parallelism such as JRuby and Rubinius would be out of the question. This is great because it is difficult to ensure that our own code is thread safe. Since forked processes are essentially copies of each other, this means that the Rails application need not be thread safe. Unicorn uses forked processes to achieve concurrency. If you are a Rails developer, you’ve probably heard of Unicorn, a HTTP server that can handle multiple requests concurrently.
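To make the forking behaviour described above concrete, here is a minimal sketch in plain Ruby. It is not Unicorn's implementation: the names `child_view` and `shared_data` are hypothetical and exist only for illustration, and the example assumes a Unix-like system where `fork` is available. It shows that a forked child starts with the parent's data, and that a write in the child does not change the parent's copy.

```ruby
# Minimal sketch (not Unicorn's code): a forked child starts as a copy of
# its parent. `child_view` and `shared_data` are hypothetical names.

def child_view(shared_data)
  reader, writer = IO.pipe

  pid = fork do
    reader.close
    # The child already sees the parent's data; nothing was sent to it.
    # With CoW, the underlying memory is shared until one side writes.
    shared_data << "written by child" # changes only the child's copy
    writer.write(shared_data.join(","))
    writer.close
    exit!(0) # leave the child without running the parent's at_exit handlers
  end

  writer.close
  result = reader.read # reads until the child closes its end of the pipe
  reader.close
  Process.wait(pid)
  result.split(",")
end

shared_data = ["loaded", "before", "fork"]
seen_by_child = child_view(shared_data)
```

After this runs, `seen_by_child` holds the three original strings plus the child's addition, while `shared_data` in the parent still holds only the original three. The pipe is needed precisely because the two processes no longer share writable state after the fork.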
